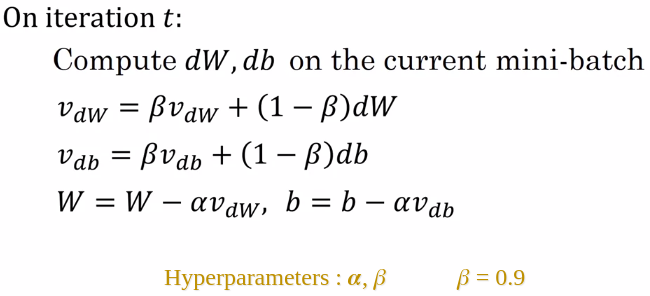

Gradient Descent with momentum typically converges faster than the standard Gradient Descent algorithm. The basic idea of momentum is to compute an exponentially weighted average of the gradients from previous iterations, which stabilizes convergence, and to use this averaged gradient to update the weight and bias parameters.
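To make the idea concrete, here is a minimal sketch of the momentum update on a toy one-parameter problem. The function name `momentum_descent`, the objective `f(w) = (w - 3)^2`, and the values of `learning_rate` and `beta` are assumptions chosen for illustration, not part of the original article.

```python
def momentum_descent(grad_fn, w=0.0, learning_rate=0.1, beta=0.9, steps=500):
    """Minimize a 1-D function given its gradient, using momentum.

    Hypothetical helper for illustration only.
    """
    v = 0.0  # running exponentially weighted average of the gradients
    for _ in range(steps):
        grad = grad_fn(w)
        # Blend the previous average with the current gradient.
        v = beta * v + (1 - beta) * grad
        # Step along the averaged gradient instead of the raw one.
        w = w - learning_rate * v
    return w


# Toy objective f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w_final = momentum_descent(lambda w: 2 * (w - 3))
print(w_final)  # approaches the minimum at w = 3
```

The same update would apply to each weight and bias of a model, with one running average kept per parameter.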

Let’s first understand what an exponentially weighted average is.

Exponentially Weighted Average

An exponentially weighted average is also known as an exponential moving average in statistics. Its general mathematical form is:

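$$V_t = \beta V_{t-1} + (1 - \beta)\,\theta_t$$

where $V_t$ is the average at step $t$, $\theta_t$ is the current value, and $\beta$ (between 0 and 1) is the smoothing factor.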

This equation gives more weight to the previous average and less weight to the current value, provided the smoothing factor is close to 1 (a common choice is 0.9).
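As a quick illustration of that weighting, the sketch below computes the running average over a short list of values. It assumes the usual recurrence `v = beta * v_prev + (1 - beta) * x` with the average starting at 0; the function name `exponentially_weighted_average` and the sample data are hypothetical.

```python
def exponentially_weighted_average(values, beta=0.9):
    """Return the running exponentially weighted average of `values`.

    Hypothetical helper for illustration; the average starts at 0.
    """
    v = 0.0
    averages = []
    for x in values:
        # The previous average gets weight beta, the current value (1 - beta).
        v = beta * v + (1 - beta) * x
        averages.append(v)
    return averages


smoothed = exponentially_weighted_average([10, 10, 10], beta=0.9)
print([round(a, 2) for a in smoothed])  # [1.0, 1.9, 2.71]
```

With `beta = 0.9`, each new value moves the average by only 10% of the gap, so the output rises slowly toward 10 even though every input is 10.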

Implementation: