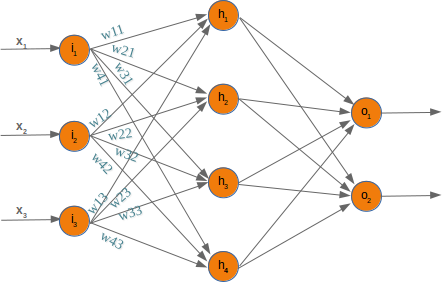

A Neural Network has a more sophisticated neuron structure, loosely inspired by the human brain. A Neural Network is a mathematical function that maps the input variables to the target variable. A Neural Network consists of the following components:

- An Input layer

- An arbitrary number of Hidden layers

- An Output layer

- A set of weights and biases between each pair of consecutive layers

- An activation function for each hidden layer
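The weights and biases in the list above map directly onto a handful of arrays. The sketch below assumes NumPy and a hypothetical helper `init_params`; it creates one weight matrix and one bias vector between each pair of consecutive layers. The small random scale (0.01) and zero-initialized biases are illustrative choices, not something prescribed by this article.

```python
import numpy as np

def init_params(layer_sizes, seed=0):
    """Create one weight matrix and one bias vector between each pair of consecutive layers.

    layer_sizes: e.g. [3, 4, 1] = input layer, one hidden layer, output layer.
    W<l> has shape (n_out, n_in), so z = W @ x + b works for a column vector x.
    """
    rng = np.random.default_rng(seed)
    params = {}
    for l in range(1, len(layer_sizes)):
        n_in, n_out = layer_sizes[l - 1], layer_sizes[l]
        params[f"W{l}"] = rng.standard_normal((n_out, n_in)) * 0.01  # small random weights
        params[f"b{l}"] = np.zeros((n_out, 1))                       # biases start at zero
    return params

params = init_params([3, 4, 1])
for name, value in params.items():
    print(name, value.shape)
# W1 (4, 3)
# b1 (4, 1)
# W2 (1, 4)
# b2 (1, 1)
```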

The following image shows a simple 2-layer Neural Network with a single hidden layer. Note that the input layer is not counted when we count the layers in a neural network.

The number of neurons in the input layer equals the number of features in the training dataset, and the number of neurons in the output layer depends on the task.

If the task is binary classification, the output layer typically contains a single neuron with a sigmoid activation, or equivalently two neurons with a softmax activation. If it is a regression task, the output layer contains only a single neuron. For a multi-class classification problem, the output layer contains as many neurons as there are classes.
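These sizing rules can be captured in a small helper. This is a sketch with hypothetical names (`output_units`, `layer_sizes`); for binary classification it assumes a single sigmoid output neuron, and a two-neuron softmax output would be an equally valid choice.

```python
def output_units(task, n_classes=None):
    """Number of output neurons for a given task (hypothetical helper)."""
    if task == "regression":
        return 1
    if task == "binary":
        return 1  # one sigmoid unit; a two-unit softmax output is an equivalent alternative
    if task == "multiclass":
        if n_classes is None or n_classes < 3:
            raise ValueError("multi-class classification needs n_classes >= 3")
        return n_classes
    raise ValueError(f"unknown task: {task!r}")

def layer_sizes(n_features, hidden_sizes, task, n_classes=None):
    """Input layer size = number of features; output layer size depends on the task."""
    return [n_features, *hidden_sizes, output_units(task, n_classes)]

print(layer_sizes(3, [4], "regression"))     # [3, 4, 1]
print(layer_sizes(3, [4], "multiclass", 5))  # [3, 4, 5]
```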

The architecture below is for 3 input features, called x1, x2 and x3. Hence, there are 3 neurons in the input layer and 4 neurons in the hidden layer.

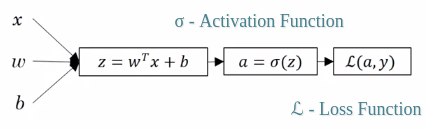
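As a worked example of this architecture (3 inputs, 4 hidden neurons), the hypothetical `describe` helper below counts layers the way this article does, excluding the input layer, and counts the trainable weights and biases. The text does not state the size of the output layer, so a single output neuron is assumed here.

```python
def describe(layer_sizes):
    """Count layers (input layer excluded) and trainable parameters."""
    n_layers = len(layer_sizes) - 1  # the input layer is not counted
    n_params = sum(n_in * n_out + n_out  # weights + biases between consecutive layers
                   for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))
    return n_layers, n_params

# 3 inputs -> 4 hidden -> 1 output (output size assumed)
print(describe([3, 4, 1]))  # (2, 21): 3*4 + 4 = 16 for the hidden layer, 4*1 + 1 = 5 for the output
```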

Training a Neural Network involves two procedures: Forward propagation and Back-propagation.

- Forward propagation, also known as feedforward, is used to predict the output variable.

- Back-propagation is used to update the weights and biases of each layer so as to minimize the loss function.

In the Forward propagation phase, each node performs a two-step calculation. First, it computes the z-value, a linear function of its inputs with the weight and bias coefficients: $z = w^T x + b$. Second, it applies the activation function $g$ to the z-value; the resulting activation value $a = g(z)$ is the final output of the neuron.
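The two steps for a single neuron can be sketched in plain Python. The inputs, weights, bias and the choice of sigmoid as the activation are arbitrary illustrative values, not taken from the article.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def neuron(x, w, b, activation):
    """Forward step for one neuron: linear z-value, then activation."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b  # step 1: z = w^T x + b
    a = activation(z)                             # step 2: a = g(z)
    return z, a

z, a = neuron(x=[1.0, 2.0, 3.0], w=[0.5, -0.25, 0.1], b=0.2, activation=sigmoid)
print(round(z, 4), round(a, 4))  # 0.5 0.6225
```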

The activation function allows the network to learn non-linear patterns between the inputs and the target output variable. Several activation functions are commonly used to train Neural Networks. You can learn more about activation functions in this tutorial.
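Below are a few common activation functions, plus a small illustration of why non-linearity matters. In this minimal sketch, the hypothetical `two_layer` network has two hidden neurons with weights +1 and -1 (zero bias) and an output neuron that sums them. With no activation (identity) it stays linear, here the constant 0, whereas with ReLU it computes |x|, which no single linear function can represent.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    return math.tanh(z)

def relu(z):
    return max(0.0, z)

def identity(z):
    return z  # equivalent to using no activation at all

def two_layer(x, act):
    # hidden layer: two neurons with weights +1 and -1 (zero bias); output neuron sums them
    return act(1.0 * x) + act(-1.0 * x)

xs = (-2.0, 0.0, 2.0)
print([two_layer(x, identity) for x in xs])  # [0.0, 0.0, 0.0]  still linear (here constant)
print([two_layer(x, relu) for x in xs])      # [2.0, 0.0, 2.0]  |x|, non-linear
```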

Forward propagation

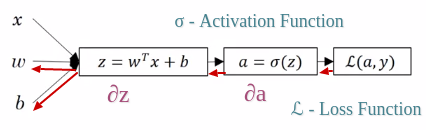
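Applied to a whole layer and a whole batch at once, the two steps become matrix operations. The sketch below assumes NumPy, tanh in the hidden layer, a single sigmoid output neuron (i.e. a binary task) and one column per training example; the parameter names `W1`, `b1`, `W2`, `b2` are illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X, params):
    """Forward propagation for a 2-layer network.

    X has shape (n_features, m): one column per training example.
    Assumes tanh in the hidden layer and a sigmoid output neuron.
    """
    Z1 = params["W1"] @ X + params["b1"]   # (n_hidden, m): linear step, hidden layer
    A1 = np.tanh(Z1)                        # activation step, hidden layer
    Z2 = params["W2"] @ A1 + params["b2"]  # (1, m): linear step, output layer
    A2 = sigmoid(Z2)                        # predicted probabilities
    return {"Z1": Z1, "A1": A1, "Z2": Z2, "A2": A2}

rng = np.random.default_rng(0)
params = {
    "W1": rng.standard_normal((4, 3)) * 0.01, "b1": np.zeros((4, 1)),
    "W2": rng.standard_normal((1, 4)) * 0.01, "b2": np.zeros((1, 1)),
}
X = rng.standard_normal((3, 5))  # 3 features (x1, x2, x3), 5 examples
cache = forward(X, params)
print(cache["A2"].shape)         # (1, 5)
```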

In the Back-propagation phase, we calculate the derivatives of the loss with respect to the z-value and the a-value at each node, and from them the derivatives with respect to the weights and biases, which are then used to update those parameters with the gradient descent method. In the figure below, the red arrows show the back-propagation procedure.
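For an assumed setup (tanh hidden layer, sigmoid output, mean binary cross-entropy loss), back-propagation reduces to a few matrix expressions. The sketch below is one way to write them with NumPy; `numerical_grad` is a hypothetical helper that checks an analytic gradient against a central finite-difference estimate.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X, p):
    A1 = np.tanh(p["W1"] @ X + p["b1"])
    A2 = sigmoid(p["W2"] @ A1 + p["b2"])
    return A1, A2

def loss(A2, Y):
    """Mean binary cross-entropy over the m examples."""
    return -np.mean(Y * np.log(A2) + (1 - Y) * np.log(1 - A2))

def backward(X, Y, p):
    """Gradients of the mean binary cross-entropy w.r.t. every weight and bias."""
    m = X.shape[1]
    A1, A2 = forward(X, p)
    dZ2 = A2 - Y                          # dL/dZ2 (times m) for sigmoid + cross-entropy
    dW2 = dZ2 @ A1.T / m
    db2 = dZ2.sum(axis=1, keepdims=True) / m
    dA1 = p["W2"].T @ dZ2                 # propagate back to the hidden activations
    dZ1 = dA1 * (1 - A1 ** 2)             # tanh'(z) = 1 - tanh(z)^2
    dW1 = dZ1 @ X.T / m
    db1 = dZ1.sum(axis=1, keepdims=True) / m
    return {"W1": dW1, "b1": db1, "W2": dW2, "b2": db2}

def numerical_grad(X, Y, p, name, idx, eps=1e-6):
    """Central-difference estimate of dL/dp[name][idx], used to check backward()."""
    original = p[name][idx]
    p[name][idx] = original + eps
    up = loss(forward(X, p)[1], Y)
    p[name][idx] = original - eps
    down = loss(forward(X, p)[1], Y)
    p[name][idx] = original  # restore the parameter
    return (up - down) / (2 * eps)

rng = np.random.default_rng(1)
p = {"W1": rng.standard_normal((4, 3)), "b1": rng.standard_normal((4, 1)),
     "W2": rng.standard_normal((1, 4)), "b2": rng.standard_normal((1, 1))}
X = rng.standard_normal((3, 8))
Y = (rng.random((1, 8)) > 0.5).astype(float)

grads = backward(X, Y, p)
# the analytic and numerical values should agree closely
print(grads["W1"][0, 0], numerical_grad(X, Y, p, "W1", (0, 0)))
```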

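Weight and bias update: $w = w - \alpha \frac{\partial L}{\partial w}$ and $b = b - \alpha \frac{\partial L}{\partial b}$, where $\alpha$ is the learning rate and $L$ is the loss function.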
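Putting the pieces together, a training loop alternates forward propagation, back-propagation and the gradient descent update. This sketch reuses an assumed 3-4-1 setup (tanh hidden layer, sigmoid output, binary cross-entropy) on randomly generated toy data; the learning rate of 0.5 and the 1000 iterations are arbitrary illustrative choices.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X, p):
    A1 = np.tanh(p["W1"] @ X + p["b1"])
    A2 = sigmoid(p["W2"] @ A1 + p["b2"])
    return A1, A2

def loss(A2, Y):
    return -np.mean(Y * np.log(A2) + (1 - Y) * np.log(1 - A2))

def backward(X, Y, p):
    m = X.shape[1]
    A1, A2 = forward(X, p)
    dZ2 = A2 - Y
    dZ1 = (p["W2"].T @ dZ2) * (1 - A1 ** 2)
    return {"W2": dZ2 @ A1.T / m, "b2": dZ2.sum(axis=1, keepdims=True) / m,
            "W1": dZ1 @ X.T / m, "b1": dZ1.sum(axis=1, keepdims=True) / m}

def update(p, grads, alpha):
    """Gradient descent step: w = w - alpha * dL/dw and b = b - alpha * dL/db."""
    for name in p:
        p[name] -= alpha * grads[name]

rng = np.random.default_rng(0)
X = rng.standard_normal((3, 200))
Y = (X[0:1] + X[1:2] - X[2:3] > 0).astype(float)  # toy labels, for illustration only
p = {"W1": rng.standard_normal((4, 3)) * 0.1, "b1": np.zeros((4, 1)),
     "W2": rng.standard_normal((1, 4)) * 0.1, "b2": np.zeros((1, 1))}

history = []
for step in range(1000):
    history.append(loss(forward(X, p)[1], Y))  # forward propagation + loss
    update(p, backward(X, Y, p), alpha=0.5)    # back-propagation + parameter update
print(history[0], history[-1])                 # the loss should decrease
```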

Back-propagation

. . .