Support Vector Machine (SVM) is one of the most popular supervised learning algorithms in machine learning. The concept behind SVM is quite simple. SVM is generally used for classification and regression problems, and sometimes also for outlier detection. The main objective of SVM is to find a hyperplane in an N-dimensional space (where N is the number of features) that distinctly separates the data points of different classes.

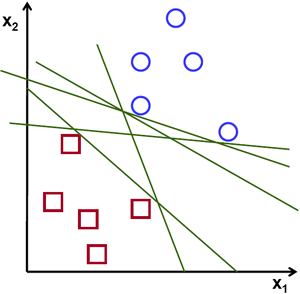

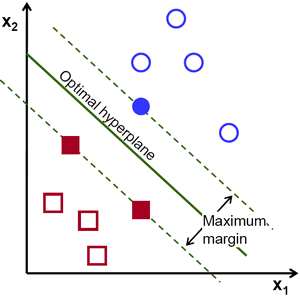

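For example, in a 2-dimensional data space there are many hyperplanes that can separate the data points. Our goal, however, is to find the hyperplane with the maximum margin, that is, the largest distance to the nearest data points of both classes. These nearest points are called support vectors.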
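The following is a minimal scikit-learn sketch of the maximum-margin idea. It assumes a small, linearly separable toy dataset generated with make_blobs. A linear SVC with a very large C approximates a hard-margin classifier. For the learned hyperplane $w \cdot x + b = 0$, the margin width is $2 / \lVert w \rVert$. The dataset and the value C=1e6 are illustrative choices and do not come from the text above.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.svm import SVC

# Two well-separated clusters in 2-D (illustrative toy data)
X, y = make_blobs(n_samples=60, centers=[[-3, -3], [3, 3]],
                  cluster_std=0.8, random_state=0)

# A very large C leaves almost no room for margin violations,
# so this approximates a hard-margin SVM
clf = SVC(kernel="linear", C=1e6)
clf.fit(X, y)

w = clf.coef_[0]        # normal vector of the separating hyperplane
b = clf.intercept_[0]   # offset: the hyperplane is w . x + b = 0
margin = 2 / np.linalg.norm(w)

print("w =", w, "b =", b)
print("margin width:", margin)
print("support vectors:\n", clf.support_vectors_)

# Support vectors lie on the margin boundaries, where |w . x + b| is about 1
print(np.abs(clf.decision_function(clf.support_vectors_)))
```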

The dimension of the hyperplane depends on the number of input features. If there are two input features, the hyperplane is a line. If there are three input features, the hyperplane is a 2-dimensional plane. In general, with N input features the hyperplane has N-1 dimensions.
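In code, a linear SVM learns one weight per input feature plus an intercept. The hyperplane $w \cdot x + b = 0$ therefore lives in the feature space and has one dimension fewer than that space. The sketch below assumes randomly generated toy data. It fits a linear SVC on 2 and then on 3 features and records the shape of the learned weight vector.

```python
import numpy as np
from sklearn.svm import SVC

rng = np.random.default_rng(0)

shapes = {}
for n_features in (2, 3):
    # Toy data: the label depends on the sign of the sum of the features
    X = rng.normal(size=(100, n_features))
    y = (X.sum(axis=1) > 0).astype(int)

    clf = SVC(kernel="linear").fit(X, y)
    # coef_ holds w (one weight per feature); intercept_ holds b
    shapes[n_features] = clf.coef_.shape
    print(n_features, "features -> w has shape", clf.coef_.shape,
          "| hyperplane dimension:", n_features - 1)
```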

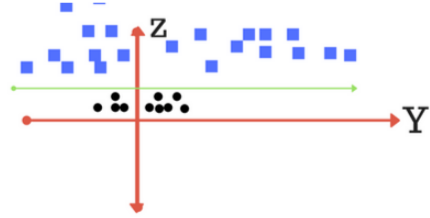

Let’s look at a slightly more difficult example:

Can you draw a separating line through the data points in this example? Clearly, no straight line can separate the two classes in the XY plane. Here, we can apply a transformation that adds a third dimension z, defined by $z^2 = x^2 + y^2$, to make the data points separable.
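The following is a minimal sketch of this idea. It assumes scikit-learn's make_circles to generate two concentric rings, which stand in for the example's data. A linear SVM on the raw (x, y) coordinates performs poorly. After the feature $x^2 + y^2$ (that is, $z^2$) is added, the classes become linearly separable in 3-D. Here the new feature is computed explicitly, whereas SVM kernel functions achieve a similar effect implicitly.

```python
import numpy as np
from sklearn.datasets import make_circles
from sklearn.svm import SVC

# Two concentric rings: no straight line separates them in the XY plane
X, y = make_circles(n_samples=200, factor=0.3, noise=0.05, random_state=0)

flat = SVC(kernel="linear").fit(X, y)
print("accuracy in the XY plane:", flat.score(X, y))

# Add a third coordinate z^2 = x^2 + y^2 (squared distance from the origin)
z2 = (X ** 2).sum(axis=1, keepdims=True)
X3 = np.hstack([X, z2])

lifted = SVC(kernel="linear").fit(X3, y)
print("accuracy after the transformation:", lifted.score(X3, y))
```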

The scikit-learn library provides implementations of the Support Vector Machine algorithm in its sklearn.svm module.
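The following is a minimal end-to-end sketch using the sklearn.svm module. It assumes the bundled Iris dataset and a 70/30 train/test split, both chosen for illustration. Feature scaling is included because SVM algorithms are not scale invariant.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

# Standardize features, then fit an SVC with the default RBF kernel and C=1.0
model = make_pipeline(StandardScaler(), SVC())
model.fit(X_train, y_train)

print("test accuracy:", model.score(X_test, y_test))
print("predictions for the first 5 test samples:", model.predict(X_test[:5]))
```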

. . .

Kernel Functions

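Support Vector Machine algorithms use a set of mathematical functions called kernels. A kernel function returns the inner product of two points in a transformed (often higher-dimensional) feature space, without computing that transformation explicitly. In scikit-learn, SVC, NuSVC, SVR and NuSVR provide 4 built-in kernel functions, chosen with the kernel parameter. These different kernel functions are:

1) Linear (kernel='linear'): $K(x, x') = \langle x, x' \rangle$

2) Polynomial (kernel='poly'): $K(x, x') = (\gamma \langle x, x' \rangle + r)^d$, where d is set by degree and r by coef0

3) Radial Basis Function (kernel='rbf', the default): $K(x, x') = \exp(-\gamma \lVert x - x' \rVert^2)$

4) Sigmoid (kernel='sigmoid'): $K(x, x') = \tanh(\gamma \langle x, x' \rangle + r)$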
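The following is a small comparison sketch of the built-in kernels ('linear', 'poly', 'rbf', 'sigmoid'), each passed to SVC through the kernel parameter. It assumes concentric-ring data from make_circles, an illustrative choice. The scores show how a non-linear kernel such as RBF separates data that the linear kernel cannot. Exact scores depend on the data and on parameters such as gamma, degree and coef0, which are left at their defaults here.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

X, y = make_circles(n_samples=300, factor=0.3, noise=0.05, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

scores = {}
for kernel in ("linear", "poly", "rbf", "sigmoid"):
    # Only the kernel changes; C, gamma, degree and coef0 keep their defaults
    clf = SVC(kernel=kernel).fit(X_train, y_train)
    scores[kernel] = clf.score(X_test, y_test)
    print(f"{kernel:>8}: test accuracy = {scores[kernel]:.2f}")
```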

. . .

Classification

Scikit-learn provides several SVM classes for classification problems, each with a different mathematical formulation. Here, SVC stands for Support Vector Classification.

1) SVC : C-Support Vector Classification

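SVC uses the parameter C as the penalty parameter of the error term. A larger C penalizes training errors more strongly and leads to a narrower margin. A smaller C tolerates more errors in exchange for a wider margin. The default value of C is 1.0, and C must be strictly positive.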
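The following sketch shows how C trades margin width against training errors. It assumes overlapping toy clusters from make_blobs, and the C values are arbitrary illustrations. A smaller C tolerates more points inside the margin, which typically shows up as more support vectors.

```python
from sklearn.datasets import make_blobs
from sklearn.svm import SVC

# Two overlapping clusters, so some training errors are unavoidable
X, y = make_blobs(n_samples=200, centers=[[0, 0], [3, 3]],
                  cluster_std=1.5, random_state=0)

n_sv = {}
for C in (0.01, 1.0, 100.0):
    clf = SVC(kernel="linear", C=C).fit(X, y)
    n_sv[C] = int(clf.n_support_.sum())  # support vectors over both classes
    print(f"C={C:>6}: support vectors={n_sv[C]:3d}, "
          f"training accuracy={clf.score(X, y):.2f}")
```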

2) NuSVC : Nu-Support Vector Classification

NuSVC is similar to SVC but uses a parameter nu, instead of C, which controls the number of support vectors and training errors. The parameter nu is an upper bound on the fraction of training errors and a lower bound on the fraction of support vectors. The value of nu must be in the interval (0, 1], and its default is 0.5.
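The following sketch checks the role of nu. It assumes a two-class toy problem from make_blobs, with nu values chosen for illustration. The fraction of training points that become support vectors should be at least nu, up to solver tolerance. Very large nu values can be infeasible when the classes are imbalanced, in which case NuSVC raises an error.

```python
from sklearn.datasets import make_blobs
from sklearn.svm import NuSVC

# Balanced two-class toy data (100 samples per class)
X, y = make_blobs(n_samples=200, centers=[[0, 0], [3, 3]],
                  cluster_std=1.5, random_state=0)

sv_fraction = {}
for nu in (0.1, 0.3, 0.6):
    clf = NuSVC(nu=nu, kernel="linear").fit(X, y)
    # Fraction of training samples that are support vectors
    sv_fraction[nu] = clf.n_support_.sum() / len(X)
    print(f"nu={nu}: fraction of support vectors={sv_fraction[nu]:.2f}")
```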

3) LinearSVC : Linear Support Vector Classification

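LinearSVC is similar to SVC with parameter kernel='linear', but it is implemented with liblinear rather than libsvm. As a result, it offers more flexibility in the choice of penalty and loss functions and scales better to large numbers of samples. Note that LinearSVC does not accept the kernel keyword, as the kernel is assumed to be linear.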
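The following sketch compares LinearSVC with SVC(kernel='linear') on a toy problem from make_classification. LinearSVC uses the squared hinge loss by default and also regularizes the intercept. Its coefficients are therefore usually close to those of SVC(kernel='linear') but not identical. Passing kernel to LinearSVC raises a TypeError, since that keyword does not exist.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC, LinearSVC

X, y = make_classification(n_samples=300, n_features=4, random_state=0)
X = StandardScaler().fit_transform(X)

# libsvm backend, standard hinge loss
svc = SVC(kernel="linear", C=1.0).fit(X, y)
# liblinear backend, squared hinge loss by default
lin = LinearSVC(C=1.0, max_iter=10_000, random_state=0).fit(X, y)

print("SVC(kernel='linear') coef:", np.round(svc.coef_, 3))
print("LinearSVC coef:           ", np.round(lin.coef_, 3))
agreement = np.mean(svc.predict(X) == lin.predict(X))
print("agreement of predictions:", agreement)

# LinearSVC has no kernel parameter at all
kernel_rejected = False
try:
    LinearSVC(kernel="linear")
except TypeError as err:
    kernel_rejected = True
    print("TypeError:", err)
```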

Multi-class Classification

SVC and NuSVC implement the “one-vs-one” strategy for multi-class classification. They construct n_class * (n_class - 1) / 2 classifiers, where n_class is the number of classes, and each classifier is trained on data from two classes. LinearSVC, on the other hand, implements the “one-vs-rest” strategy, training one classifier per class.
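The following sketch makes the classifier counts visible. It assumes 4-class toy data from make_blobs. With decision_function_shape='ovo', SVC returns one decision value per pair of classes, which gives 4 * 3 / 2 = 6 columns. LinearSVC stores one weight vector per class. SVC's default decision_function_shape='ovr' only reshapes the output to one column per class, and training is still one-vs-one internally.

```python
from sklearn.datasets import make_blobs
from sklearn.svm import SVC, LinearSVC

n_class = 4
X, y = make_blobs(n_samples=200, centers=n_class, random_state=0)

ovo = SVC(kernel="linear", decision_function_shape="ovo").fit(X, y)
ovr_lin = LinearSVC(max_iter=10_000, random_state=0).fit(X, y)

# One column per pair of classes: n_class * (n_class - 1) / 2 = 6
print("SVC one-vs-one decision values:", ovo.decision_function(X).shape)
# One weight vector (row) per class for one-vs-rest
print("LinearSVC coef_ shape:", ovr_lin.coef_.shape)
```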

. . .

Regression

SVM can also be used to solve regression problems; this method is called Support Vector Regression (SVR). Scikit-learn provides three different implementations of SVR:

1) SVR : Support Vector Regression

SVR uses the parameters C and epsilon. C is the penalty parameter of the error term. The epsilon parameter specifies the epsilon-tube: in the training loss function, no penalty is associated with points predicted within a distance epsilon of the actual value. By default, C=1.0 and epsilon=0.1.
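The following sketch shows the epsilon-tube in action. It assumes a noisy sine curve as toy data, with epsilon values chosen for illustration. Points strictly inside the tube incur no loss and do not become support vectors. Widening the tube therefore typically reduces the number of support vectors.

```python
import numpy as np
from sklearn.svm import SVR

rng = np.random.default_rng(0)
X = np.sort(rng.uniform(0, 5, size=(100, 1)), axis=0)
y = np.sin(X).ravel() + rng.normal(scale=0.1, size=100)

n_sv = {}
for eps in (0.01, 0.1, 0.5):
    reg = SVR(kernel="rbf", C=1.0, epsilon=eps).fit(X, y)
    n_sv[eps] = len(reg.support_)  # support_ holds the support-vector indices
    print(f"epsilon={eps}: support vectors={n_sv[eps]}, R^2={reg.score(X, y):.2f}")
```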

2) NuSVR : Nu-Support Vector Regression

NuSVR is similar to NuSVC: it uses the parameter nu to control the number of support vectors and training errors for regression. However, unlike NuSVC, where nu replaces C, here nu replaces the parameter epsilon of epsilon-SVR. The parameter nu is an upper bound on the fraction of training errors and a lower bound on the fraction of support vectors. The value of nu must be in the interval (0, 1].
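The following sketch uses the same kind of noisy sine data, with nu values chosen for illustration. Instead of fixing the tube width, nu sets a lower bound on the fraction of points that become support vectors, and the tube width is found during training.

```python
import numpy as np
from sklearn.svm import NuSVR

rng = np.random.default_rng(0)
X = np.sort(rng.uniform(0, 5, size=(100, 1)), axis=0)
y = np.sin(X).ravel() + rng.normal(scale=0.1, size=100)

sv_fraction = {}
for nu in (0.1, 0.5, 0.9):
    # nu replaces epsilon; C keeps its default of 1.0
    reg = NuSVR(nu=nu, C=1.0).fit(X, y)
    sv_fraction[nu] = len(reg.support_) / len(X)
    print(f"nu={nu}: fraction of support vectors={sv_fraction[nu]:.2f}")
```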

3) LinearSVR : Linear Support Vector Regression

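LinearSVR is similar to SVR with parameter kernel='linear', but it is implemented with liblinear rather than libsvm. As a result, it offers more flexibility in the choice of penalty and loss functions and scales better to large numbers of samples. Note that LinearSVR does not accept the kernel keyword, as the kernel is assumed to be linear.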
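The following is a minimal LinearSVR sketch. It assumes synthetic data following y = 3x + 0.5 with a little noise. The settings epsilon=0.0 (LinearSVR's default) and max_iter=10_000 are illustrative choices. As with LinearSVC, the intercept is regularized, so results can differ slightly from SVR(kernel='linear').

```python
import numpy as np
from sklearn.svm import LinearSVR

rng = np.random.default_rng(0)
# Toy linear data: y = 3x + 0.5 plus a little noise
X = rng.uniform(-1, 1, size=(200, 1))
y = 3 * X.ravel() + 0.5 + rng.normal(scale=0.1, size=200)

# No kernel keyword: the model is always linear (liblinear backend)
reg = LinearSVR(C=1.0, epsilon=0.0, max_iter=10_000, random_state=0).fit(X, y)
print("slope:", reg.coef_[0], "intercept:", reg.intercept_[0])
print("R^2:", reg.score(X, y))
```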

. . .